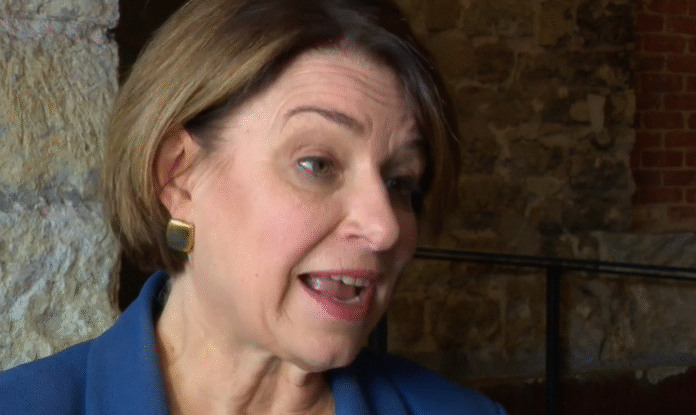

Minnesota Senator Amy Klobuchar is raising alarms about the dangers of artificial intelligence after she became the target of a fake video that used AI to mimic her voice.

In a recent opinion piece published in The New York Times, Klobuchar explained how someone created an AI-generated video that falsely featured her speaking in a “vulgar and absurd” way. The video was shared online and made it appear as though she was criticizing an advertisement.

Klobuchar said what disturbed her most was not just the existence of the deepfake, but the struggle to get it taken down. The video, she said, had borrowed elements from an actual Senate hearing in July, which made it look even more convincing.

“For years I have been working to address the growing problem of deepfakes, but this experience made it clear just how powerless we are right now,” Klobuchar wrote. “I spent hours trying to stop the spread of a single video and realized how few tools exist for victims of these fake digital attacks.”

A Growing Threat

Klobuchar stressed that her case is just one example of a much larger issue. Deepfakes (videos, images, or audio created using AI to imitate real people) are spreading quickly online and being used to mislead the public.

Last month, for example, another imposter used AI to copy the voice of U.S. Secretary of State Marco Rubio. According to reports, the fake voice was used to call foreign officials, a member of Congress, and even a state governor, pretending to be Rubio.

“These are not just silly internet pranks. They are dangerous tools that can be used to manipulate elections, spread false information, and harm reputations,” Klobuchar warned.

Push for New Laws

To address this problem, Klobuchar is urging Congress to pass her proposed legislation known as the NO FAKES Act. The bill would create clear protections for people whose voices or images are stolen by AI. It would also establish a process for victims to demand that social media companies remove unauthorized deepfakes.

She also wants stronger rules requiring companies to label content that has been substantially created or altered by AI, so viewers can tell what is real and what is not.

“In the United States, within the bounds of our Constitution, we must adopt common-sense safeguards for artificial intelligence,” she wrote. “At the very least, people deserve to know when they are looking at or listening to something that has been generated by AI.”

Minnesota’s Example and the Legal Battle

Klobuchar pointed out that Minnesota has already passed a state law making it illegal to share AI-generated deepfakes that are intended to influence an election or that depict a person in sexual acts without consent. The law was designed to protect voters and individuals from being misled or humiliated by deepfakes.

However, the law has faced pushback. In April, Elon Musk, the owner of X (formerly Twitter), sued Minnesota Attorney General Keith Ellison, arguing that the law violates free speech rights and could result in censorship of political speech. That legal fight is ongoing.

The Need for Urgency

Klobuchar argues that without national action, Americans will remain vulnerable to this fast-moving technology. She believes the issue goes beyond politics and touches on democracy, personal safety, and trust in information.

“If a U.S. senator can be targeted and still struggle to get one deepfake taken down, imagine how powerless ordinary people feel,” she said. “We cannot afford to wait until the damage is irreversible. The time to act is now.”

As AI becomes more powerful and widely available, Klobuchar says the U.S. must balance innovation with accountability. For her, the lesson is clear: technology should serve the public, not exploit it.